Student Perspective

Using the student perspective involves gathering information from students. In addition to Student Experience Surveys, instructors might also collect and analyze information from mid-semester student surveys, pre/post-testing to measure outcomes, and instructor-created or research-based surveys.

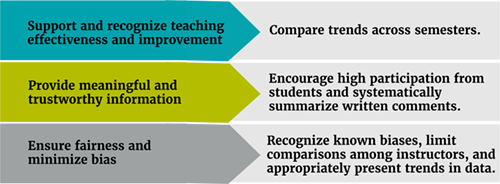

Using the student perspective can achieve important goals (left side of the graphic) through robust practices (right side of the graphic).

A text version of the Student Perspective Tips graphic is available (PDF).

Decision Guide

Here are guiding questions departments can consider as they develop processes for using Student Experience Surveys (SES).

Standardizing the ways SES data are collected, analyzed, and interpreted will help ensure fairness among faculty and produce more trustworthy information for evaluations. To standardize, consider:

- What additional questions, if any, will your department add to UGA’s mandatory Student Experience Survey?

- How will your department encourage high response rates from students while maintaining their confidentiality?

- How will your department support faculty in analyzing and reporting quantitative data appropriately?

  For example, response options such as strongly agree, agree, and neutral are ordinal and should be analyzed as distributions rather than as averages.

- How will your department support faculty in summarizing and reporting student comments systematically?

  Responses should be summarized and trends identified. Comments should not be cherry-picked to support a particular conclusion.

- Which SES results will your department expect faculty to report on for annual review? For promotion?
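To illustrate the point about ordinal responses, here is a minimal Python sketch that summarizes one survey item as a distribution (counts, percentages, and the median category) instead of a mean. The five-point scale labels and the sample responses are assumptions for illustration only; they are not the actual SES wording or data.

```python
from collections import Counter

# Hypothetical five-point agreement scale, ordered from lowest to highest.
# These labels are an assumption, not necessarily the SES item wording.
SCALE = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


def summarize_ordinal(responses):
    """Return counts, percentages, and the median category for one item.

    The result is a distribution over the ordered scale rather than an
    arithmetic mean, because the distances between categories are unknown.
    """
    unknown = set(responses) - set(SCALE)
    if unknown:
        raise ValueError(f"Unrecognized responses: {sorted(unknown)}")
    if not responses:
        raise ValueError("No responses to summarize")

    counts = Counter(responses)
    n = len(responses)
    # One row per scale category, in scale order, including empty categories.
    table = [
        (label, counts[label], round(100 * counts[label] / n, 1))
        for label in SCALE
    ]

    # Median category: the first category whose cumulative count reaches
    # half of the responses (the lower median when n is even).
    cumulative = 0
    median = None
    for label in SCALE:
        cumulative += counts[label]
        if cumulative >= n / 2:
            median = label
            break
    return {"n": n, "table": table, "median": median}


if __name__ == "__main__":
    responses = ["Agree", "Strongly agree", "Agree", "Neutral", "Disagree",
                 "Agree", "Strongly agree", "Agree"]
    summary = summarize_ordinal(responses)
    print(f"n = {summary['n']}, median category = {summary['median']}")
    for label, count, pct in summary["table"]:
        print(f"{label:<18} {count:>3} {pct:>6.1f}%")
```

Reporting the full table keeps visible, for example, whether a middling result reflects broad neutrality or a split between agreement and disagreement, a difference that a single average would hide.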

Inequities can result from biases in SES data. Biases can arise in SES ratings based on:

- instructor characteristics (e.g., race & ethnicity, gender, age, nation of origin)

- course characteristics (e.g., upper versus lower division, lab versus “lecture”)

- student characteristics (e.g., major, year in school, performance in the course), and

- other contextual factors (e.g., discipline, fall versus spring semester)

As a result of these known biases, comparisons across instructors are not valid: we cannot conclude that differences in SES ratings across instructors result from differences in teaching effectiveness. Instead, comparisons of SES ratings for the same instructor in the same course over time provide valuable information about growth.
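A minimal Python sketch of the self-comparison principle: ratings are grouped by instructor and course, and change is measured only within each group, never across instructors. The record layout, the term codes (sortable strings such as "2024-01"), and the choice to summarize each term by a hypothetical `share_agree` value (the fraction of respondents choosing agree or strongly agree on one item) are all assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical record layout: (instructor, course, term, share_agree).
# term is assumed to be a sortable string such as "2024-01" (year-month the
# term starts), and share_agree is the fraction of respondents who chose
# "Agree" or "Strongly agree" on a single survey item.


def trends_by_instructor_course(records):
    """Group ratings so each instructor is compared only with themselves.

    Returns {(instructor, course): [(term, share_agree), ...]} in term order.
    Deliberately computes no ranking or pooled average across instructors.
    """
    grouped = defaultdict(list)
    for instructor, course, term, share_agree in records:
        grouped[(instructor, course)].append((term, share_agree))
    return {key: sorted(series) for key, series in grouped.items()}


def change_over_time(series):
    """Difference in share_agree between the latest and earliest term.

    Returns None when there is only one term, since no change can be measured.
    """
    if len(series) < 2:
        return None
    return round(series[-1][1] - series[0][1], 3)


if __name__ == "__main__":
    records = [
        ("Instructor A", "Course X", "2024-08", 0.78),
        ("Instructor A", "Course X", "2023-08", 0.62),
        ("Instructor A", "Course X", "2024-01", 0.70),
        ("Instructor B", "Course X", "2024-08", 0.81),
    ]
    for (instructor, course), series in trends_by_instructor_course(records).items():
        print(instructor, course, series, "change:", change_over_time(series))
```

In this sketch, Instructor B's single term yields no change value, and Instructor B's rating is never set against Instructor A's, even though both taught the same course.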

To help minimize bias, consider the following questions:

- How will you ensure that instructors are compared only to themselves?

- How will you ensure that faculty discussions about SES data focus on comparisons of instructors to themselves, not cross-instructor comparisons, and that the potential for bias is kept in mind?

- How will you educate department members about the appropriate ways to analyze and interpret student experience survey data?

Designing processes to document change recognizes effort toward improvement, rather than just excellence:

- What items from the Student Experience Survey will you use to document change over time?

Student experience surveys provide just one source of information from students. Mid-semester formative student experience surveys, research-based surveys, instructor-generated surveys, and student interviews can all be effectively used to learn about students’ experiences and outcomes.

- What additional student data will the department encourage faculty to collect?

- What departmental data about student learning outcomes could be leveraged for teaching evaluation?

Departmental Quick Start Guide

Additional Resources

Guidance for appropriate use of data from students

- Big-picture evidence-based considerations about SES data (PDF) (University of Colorado Boulder Faculty Assembly)

- Interpreting & Responding to Student Evaluations of Teaching (PDF) (University of Georgia)

- Interpreting & Using Student Ratings Data (Linse, 2017)

- Guide for Advancing Teaching Evaluation, Student Voice (PDF) (University of Georgia)

- Requesting Student Letters, Template (.docx) (University of Colorado Boulder)

- Surveying Students for Feedback (Georgia Tech)

Example questions to include on student experience surveys

- Student Course Survey Items (University of Arizona)

- Evaluation of Teaching Items in Course Evaluations (Boise State)

Increase response rates and elicit constructive student feedback

- Strategies to Increase Student Response Rates (Vanderbilt University)

- Top 20 Strategies to Increase the Online Response Rate (PDF) (Berk, 2012)

- Advice for Students: Providing Helpful Feedback to your Instructors (PDF) (University of Michigan)

Selected papers demonstrating bias in student evaluations

- Gender Bias in Student Evaluations of Teaching (Adams et al., 2021)

- Unbiased, Reliable, and Valid Student Evaluations can Still be Unfair (Esarey & Valdes, 2020)

- Gender and Cultural Bias in Student Evaluations (Fan et al., 2019)

- Examining Student Evaluations of Black College Faculty (Smith & Hawkins, 2011)